4 reasons automated data quality monitoring is vital for data observability

Data observability is a trending topic right now because of its ability to tackle data quality in complex data architectures (like data mesh and data fabric). Companies using these distributed landscapes usually have some governance concepts or principles they want to keep central. Even beyond these new architectural blueprints, any large organization with distributed data teams benefits from achieving great data observability.

Data observability offers what we call a “self-service data infrastructure,” a tool so intuitive and beneficial that your distributed teams will adopt it eagerly and willingly, instead of you having to impose it on them. This lets you standardize data management and quality practices and gives you an overview of your data system’s health as a whole, while still keeping your teams autonomous.

Still, you need to be mindful of how you build your data observability solution. While volume, schema, anomaly, and freshness monitoring lend themselves naturally to automation, what about data quality monitoring? Given the challenges data observability is meant to fix, data quality monitoring needs to be automated too. Let’s discuss why.

The critical components of data observability

Data observability measures your company’s ability to understand the state of your data based on available information, such as data quality issues, anomalies, or schema changes. To achieve optimal data observability, you need a collection of tools that will deliver this understanding of your data. These include a business glossary, AI, data quality (DQ) rules, and cataloging/discovery.

Once you have these tools in place, you’ll have in-depth information about key characteristics of your data, such as volume, lineage, anomalies, DQ, and schema. This information will help you monitor your data system and receive alerts when anything changes or a potential data issue occurs.

The goals of data observability and how to achieve them

Before we get into the specifics of automated data quality and observability, let’s remind ourselves of what data observability is set to achieve:

- Quickly identify and solve data problems

- Automate the monitoring of the whole data stack

- Onboard decentralized teams quickly so they can monitor systems in a self-service way

- Collect as much information as possible about the data stack to get a holistic picture of data health

- Ensure the reliability of data pipelines, which helps build trust in data throughout the company

Achieving these goals in a distributed, decentralized data environment requires a high degree of automation. That is why AI-based solutions are so important. With as little as a click of a button, they let you collect a wide range of information about your data stack, such as:

- Metadata anomalies (unexpected changes in the number of null values, a drastic change in the mean value, etc.)

- Record-level data anomalies

- Transactional data anomalies

- Infrastructure anomalies (volume of data, schema changes, and freshness alerts)
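To make the first category concrete, here is a minimal sketch of metadata anomaly detection, assuming each metric (such as a column's null count or a mean value) has a short history from previous profiling runs. It uses a plain z-score against that history as a stand-in for the AI models described above; the metric names, sample values, and the threshold of 3 are illustrative assumptions, not any product's actual method.

```python
from statistics import mean, stdev


def zscore(history: list[float], current: float) -> float:
    """Number of standard deviations `current` lies from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs >= 2 values
    if sigma == 0:
        # A perfectly flat history makes any change infinitely surprising.
        return 0.0 if current == mu else float("inf")
    return (current - mu) / sigma


def metadata_anomalies(history: dict[str, list[float]],
                       current: dict[str, float],
                       threshold: float = 3.0) -> list[str]:
    """Return the metrics whose latest value deviates more than `threshold` sigmas."""
    return [metric for metric, past in history.items()
            if abs(zscore(past, current[metric])) > threshold]


# Hypothetical profiling history for a customer table across five daily runs.
history = {
    "null_count": [12, 15, 11, 14, 13],
    "mean_balance": [1020.0, 998.5, 1011.2, 1005.7, 1003.1],
}
current = {"null_count": 240, "mean_balance": 1008.0}

print(metadata_anomalies(history, current))  # ['null_count']
```

Here the jump from roughly 13 nulls to 240 is flagged, while the small move in the mean balance stays within its normal range.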

The great advantage of AI-based monitoring is that it requires no initial setup and, like any supervised ML model, can be taught to improve its accuracy.
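The idea of "teaching" a monitor can be illustrated with a deliberately simple sketch: users label past alerts as real issues or false alarms, and the anomaly threshold is re-tuned to agree best with those labels, measured by F1 score. This is a one-parameter stand-in for a supervised model, not how any particular product trains its detectors; the scores, labels, and candidate thresholds are made-up examples.

```python
def f1_score(predicted: list[bool], actual: list[bool]) -> float:
    """Harmonic mean of precision and recall for binary alert decisions."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if a and not p)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def tune_threshold(scores: list[float], labels: list[bool],
                   candidates: list[float]) -> float:
    """Pick the candidate threshold whose alerts best match user feedback."""
    best_threshold, best_f1 = candidates[0], -1.0
    for threshold in candidates:
        predicted = [abs(score) > threshold for score in scores]
        f1 = f1_score(predicted, labels)
        if f1 > best_f1:  # strict '>' keeps the first threshold on ties
            best_threshold, best_f1 = threshold, f1
    return best_threshold


# Hypothetical anomaly scores for past events, with user verdicts:
# True = "real data issue", False = "false alarm".
scores = [0.5, 1.2, 2.1, 2.4, 3.5, 4.8, 6.0]
labels = [False, False, False, True, True, True, True]

print(tune_threshold(scores, labels, [1.0, 2.0, 2.2, 3.0, 4.0]))  # 2.2
```

Every new round of feedback can be appended to `scores` and `labels` and the threshold re-tuned, which is the essence of a monitor that improves as users correct it.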

This kind of monitoring is essential for achieving data observability. What about data quality monitoring, another critical component of data observability? While pure AI-based techniques are easily scalable, traditional data quality monitoring is not.

The problem with traditional data quality monitoring

Traditional, or manual, data quality monitoring relies on producing custom implementations of the same rule for different data sources or, in the best-case scenario, mapping reusable rules to specific tables and attributes manually.

In extensive enterprise data landscapes spanning hundreds or thousands of data sources (sometimes across different countries and continents), this approach doesn’t scale. So while part of the signal collection for data observability is highly automated, the other, equally important part remains manual.

What is automated data quality?

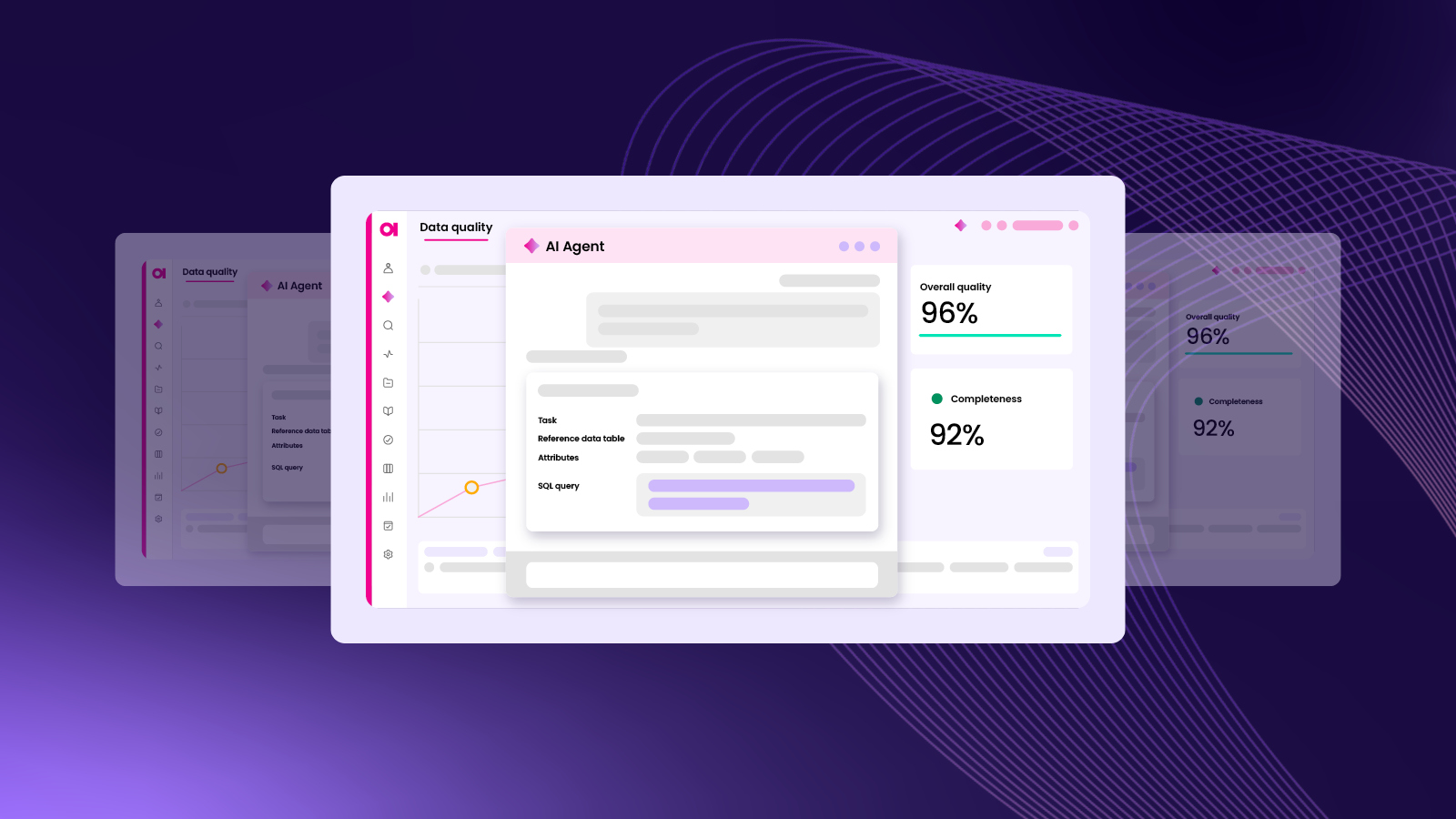

Automated DQ combines AI and rule-based approaches to automate all aspects of data quality: configuration, measurement, and providing data. By combining the data catalog, central rule library, business glossary, and data profiling, it’s able to automatically:

- Discover data domains such as names, addresses, product codes, insurance numbers, account balances, etc.

- Map data quality rules to specific business domains.

- Provide metadata discovery for data profiling and classification.

- Continuously review data domain definitions, DQ rules, and AI suggestions for newly discovered data.
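A minimal sketch of the first two steps, domain discovery and rule mapping, assuming domains can be recognized by matching sampled column values against regular expressions. The domain patterns, the 80% match cutoff, the rule names, and the column data are all hypothetical; a production system would likely draw on richer signals, such as the catalog and glossary metadata mentioned above.

```python
import re
from typing import Optional

# Hypothetical domain detectors: regular expressions for a few business domains.
DOMAIN_PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "country_code": re.compile(r"[A-Z]{2}"),
    "product_code": re.compile(r"PRD-\d{5}"),
}

# Hypothetical central rule library: rules are attached to domains, not tables.
RULES_BY_DOMAIN = {
    "email": ["not_null", "valid_email_format"],
    "country_code": ["not_null", "valid_iso_country"],
    "product_code": ["not_null", "valid_product_code"],
}


def detect_domain(sample: list[str], min_match: float = 0.8) -> Optional[str]:
    """Return the first domain matched by at least `min_match` of non-empty values."""
    values = [v for v in sample if v]
    if not values:
        return None
    for domain, pattern in DOMAIN_PATTERNS.items():
        hits = sum(1 for v in values if pattern.fullmatch(v))
        if hits / len(values) >= min_match:
            return domain
    return None


def map_rules(columns: dict[str, list[str]]) -> dict[str, list[str]]:
    """Attach library rules to every column whose domain could be detected."""
    mapping = {}
    for column, sample in columns.items():
        domain = detect_domain(sample)
        if domain is not None:
            mapping[column] = RULES_BY_DOMAIN[domain]
    return mapping


# Sampled values from a hypothetical customer table.
columns = {
    "contact": ["ana@example.com", "bo@example.org", "", "cy@example.net"],
    "country": ["DE", "FR", "CZ", "US"],
    "notes": ["call back", "vip", "n/a"],
}
print(map_rules(columns))
# {'contact': ['not_null', 'valid_email_format'], 'country': ['not_null', 'valid_iso_country']}
```

The free-text `notes` column matches no domain, so no rules are mapped to it; in practice that is the kind of column that would be surfaced for review rather than silently skipped.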

| Manual data quality monitoring | Automated data quality monitoring |
| --- | --- |
| Manual mapping of rules | Metadata-driven mapping of rules |
| Uncontrolled rule management | Centralized rule management |
| Non-reusable DQ rules | Reusable DQ rules |
| Non-scalable | Highly scalable |

Why automated data quality is vital for data observability

By this point, it might be obvious why automating data quality monitoring is important for optimal data observability workflows. However, let’s spell out how it helps achieve the goals of data observability we mentioned earlier.

#1 Enables the automation of critical data quality controls

Data quality monitoring is clearly critical for getting data observability right, and it is just as critical to automate it, like the other parts of the data observability equation. For many enterprises whose data governance and business teams have clearly defined data quality rules, leaving data quality monitoring manual would seriously cripple their ability to get a holistic view of their data health.

#2 Easy set-up will make your teams happy to adopt it

One of the most attractive aspects of any observability solution is its relatively easy setup on source systems. Automated DQ makes setup even easier by simplifying rule creation and the configuration of AI systems such as anomaly detection. This helps achieve the “self-service” architecture we described in the introduction. Once in place, automated DQ can also deliver results as flexibly as you need, at any level: from a single table to an entire business domain or data source.
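Delivering results "at any level" amounts to aggregating rule outcomes along different keys. The sketch below assumes each rule run yields a count of passed and checked records, tagged with its source, table, and business domain, and rolls pass rates up to whichever level you ask for. The `RuleResult` structure and the sample figures are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass

LEVELS = ("source", "table", "domain")


@dataclass(frozen=True)
class RuleResult:
    source: str   # system the data came from
    table: str    # fully qualified table name
    domain: str   # business domain of the checked column
    passed: int   # records that passed the rule
    checked: int  # records the rule evaluated


def pass_rate_by(results: list[RuleResult], level: str) -> dict[str, float]:
    """Roll rule outcomes up to the requested level as a pass rate."""
    if level not in LEVELS:
        raise ValueError(f"level must be one of {LEVELS}")
    passed: dict[str, int] = defaultdict(int)
    checked: dict[str, int] = defaultdict(int)
    for r in results:
        key = getattr(r, level)
        passed[key] += r.passed
        checked[key] += r.checked
    # Skip groups where no records were evaluated, to avoid dividing by zero.
    return {key: passed[key] / checked[key] for key in checked if checked[key]}


# Hypothetical results from three rule runs.
results = [
    RuleResult("crm_eu", "crm_eu.customers", "email", 950, 1000),
    RuleResult("crm_eu", "crm_eu.orders", "product_code", 1980, 2000),
    RuleResult("crm_us", "crm_us.customers", "email", 700, 1000),
]

print(pass_rate_by(results, "domain"))  # {'email': 0.825, 'product_code': 0.99}
print(pass_rate_by(results, "source"))
```

Storing raw passed/checked counts rather than per-run percentages keeps the roll-up correct when groups differ in size.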

#3 Provides a repeatable framework for decentralized teams

All configurations, rules, and subroutines are reusable and centrally defined in a rule library. This makes it much easier for teams to apply the same rules and implement centralized policies without rewriting or reconfiguring them over and over again. Ultimately, this means that new teams and systems can be onboarded much faster.
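A sketch of what "define once, reuse everywhere" can look like: a hypothetical central library of parameterized rules, and two teams that assign library rules to their own columns by name instead of re-implementing them. Rule names, column names, and data are invented for illustration.

```python
import re
from typing import Callable, Optional

Rule = Callable[[Optional[str]], bool]


# Reusable rule templates, each written once by a central team (hypothetical).
def not_null() -> Rule:
    return lambda v: v is not None and v.strip() != ""


def matches(pattern: str) -> Rule:
    compiled = re.compile(pattern)
    return lambda v: v is not None and compiled.fullmatch(v) is not None


# Central rule library: named, configured instances of the templates.
RULE_LIBRARY: dict[str, Rule] = {
    "not_null": not_null(),
    "iso_country_code": matches(r"[A-Z]{2}"),
    "product_code": matches(r"PRD-\d{5}"),
}


def run_checks(assignments: dict[str, list[str]],
               data: dict[str, list[Optional[str]]]) -> dict[str, dict[str, float]]:
    """Report, per column, the pass rate of each library rule assigned to it."""
    report = {}
    for column, rule_names in assignments.items():
        values = data[column]
        if not values:
            continue  # nothing to check in an empty column
        report[column] = {
            name: sum(RULE_LIBRARY[name](v) for v in values) / len(values)
            for name in rule_names
        }
    return report


# Two decentralized teams reuse the same rules by name; no rule code is copied.
eu_team = {"eu_crm.customers.country": ["not_null", "iso_country_code"]}
us_team = {"us_billing.invoices.country": ["not_null", "iso_country_code"]}

data = {
    "eu_crm.customers.country": ["DE", "FR", None, "cz"],
    "us_billing.invoices.country": ["US", "US", "CA", "MX"],
}
print(run_checks(eu_team, data))
# {'eu_crm.customers.country': {'not_null': 0.75, 'iso_country_code': 0.5}}
print(run_checks(us_team, data))
```

Fixing or tightening a rule in `RULE_LIBRARY` changes it for every team at once, which is what makes onboarding a new team a matter of configuration rather than code.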

Imagine using manual DQ in a decentralized environment. You would need someone on each distributed team (or a dedicated department) to code the rules for every new DQ rule or system you introduce. This would be incredibly time-consuming and require far more manpower.

Automated DQ requires fewer working hours to maintain and configure. Developers don’t need to focus on configuring DQ rules for their team and its needs. You also won’t need to spend countless hours on repetitive processes like linking tables to domains, adding notes, or endless code maintenance.

#4 Makes data observability truly scalable

Another goal of observability is scaling your data systems. As your distributed teams grow in size and number, you’ll need a DQ solution that can adapt and grow with your system. Automated DQ lets your observability grow unhindered by providing the benefits we mentioned above: saving time, offering reusable configurations, and requiring fewer resources to set up and maintain. Its adaptability also means it will be able to handle current and future data sources and types.

Get started with data observability

An automated data quality platform is a fundamental feature of our data observability solution. Setup is easy. Simply connect your source system to the observability dashboard and get DQ results instantly.